Data analysis and cleansing are the most time consuming parts when you want to load source data, or prepare sampling data for data mining. You can write TSQL to find out distinct values or lengths of a column that are existing in the testing data. However, when you have millions of rows or more than thirty columns for analysis, TSQL scripts might take a whole day to get the answer. Since SQL Server 2008, SSIS has Data Profiling task that can conveniently help analyze the content and structure of data, and uncover patterns, inconsistencies, anomalies and even redundancies. Today we will give a brief demonstration about this hidden gem for data analysis.

How to view the result by Data Profiler Viewer?

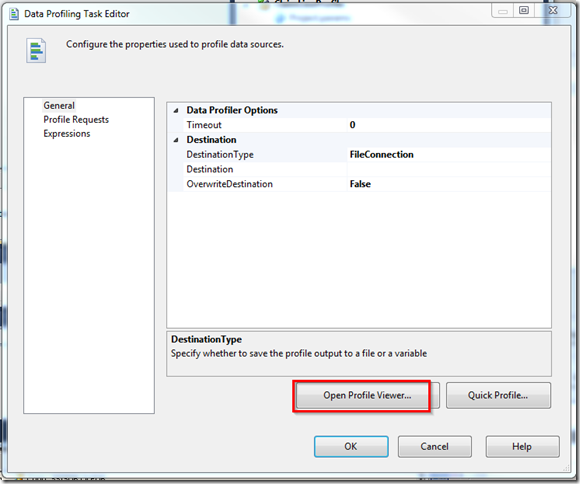

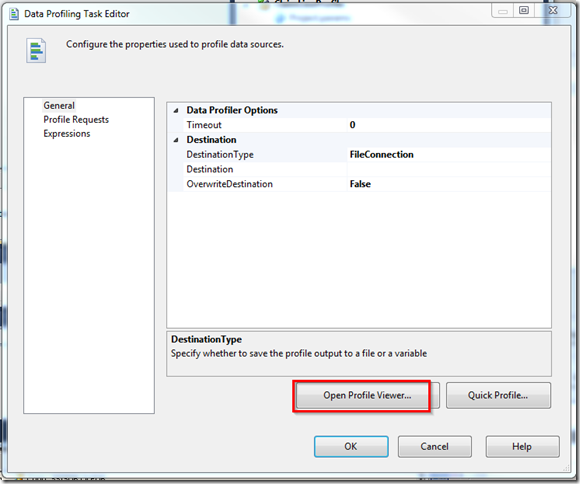

Data Profiler Viewer is used to view the generated profiler by data profiling task in SSIS. You create a new package and drag “Data Profiling Task” to the control flow pane as shown.

Double click the task, a new Data Profiling Task Editor window pops up and then click “Open Profile Viewer” button.

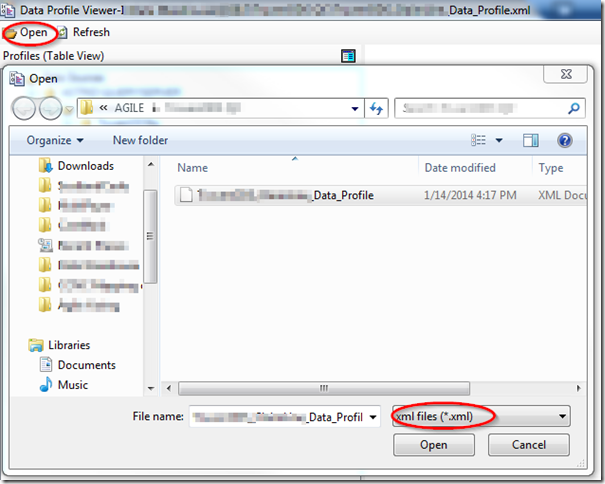

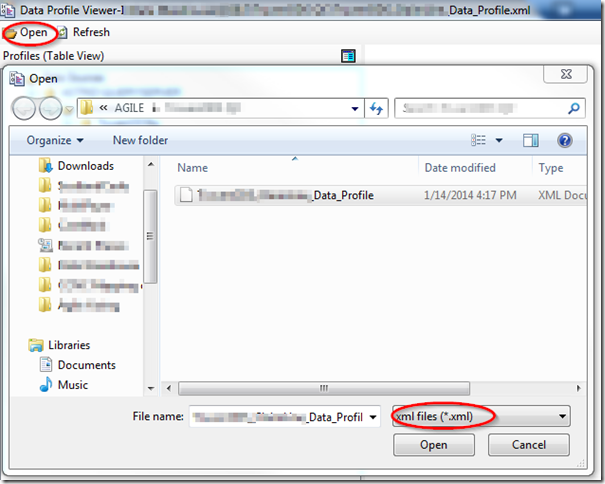

Click the open button at the upper left corner and locate the output profile file in xml format.

Types of profiles and statistics for analysis

Then you can explore the profile. Below only show four kinds of profiler but you can have eight kinds of profiles to request:

- Column Length Distribution

- Column Null Ratio

- Column Pattern

- Column Statistics including min, max, mean, standard deviation for each column.

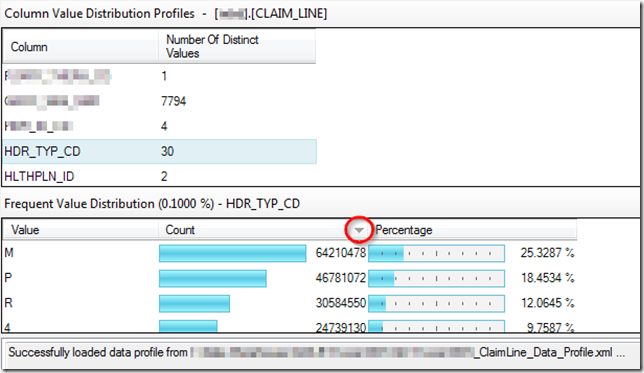

- Column Value Distribution including values, counts, percentages.

- Functional Dependency: fully or partially dependent of other column to check redundancy.

- Candidate Key: check which column might be a good candidate for a primary key or business key.

- Value Inclusion: check whether all values in a column exists in another table. It can be dimensions or lookup table.

Demo

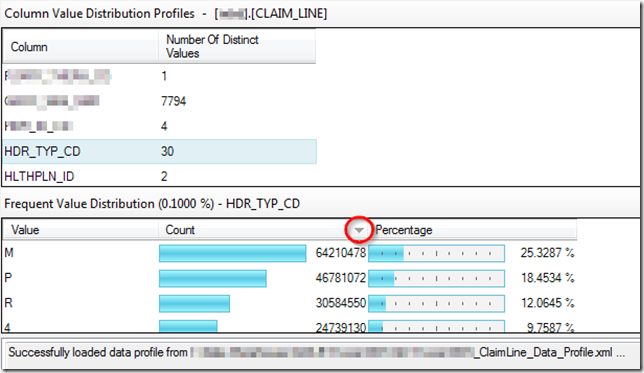

Let us take a look at the Column Value Distribution Profile first. Click each column on the top pane and then the lower pane will display Value, Count, Percentage for that column. You can sort by value, counts, or percentage by clicking the dropdown arrow at the right side of item. For this column, you can easily tell the top three kinds of type codes are M, P, R.

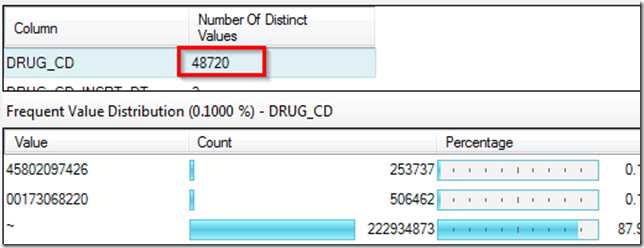

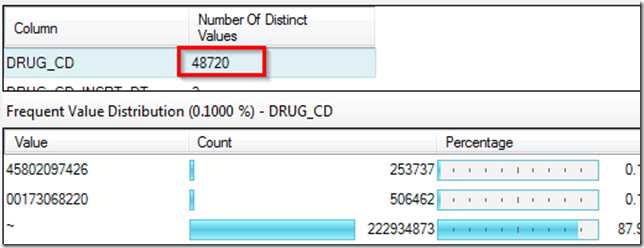

Note that it won’t be an exhausted list for all the values. Here is an example for drug code. It only listed three values in the lower pane but number of distinct values is 48720. So if the variation for column values is a lot but for some values, there are only a tiny percentage records, the profile won’t display those small percentage.

In summary, data profiles generated by Data Profiling Task of SSIS is very convenient to help you understand data, find patterns, derive data rules, detect the outliners of columns for data cleansing. You can even perform data profiling to test the foreign key relationship. If you would like to find out how to set up each kinds of profile requests, Jamie Thomson’s series of SSIS: Data Profiling Task are an awesome reference.

Reference: TechNet’s Data Profile Viewer