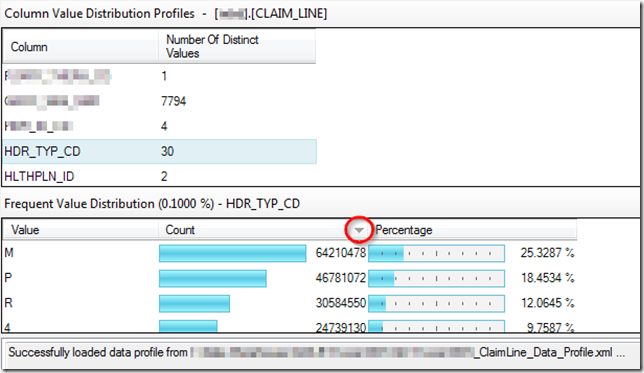

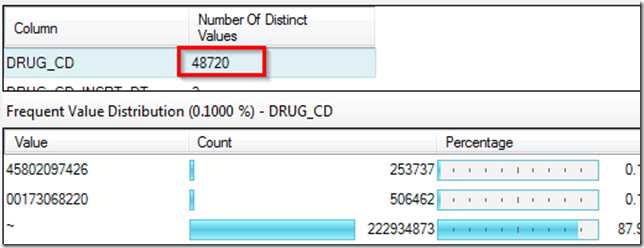

Using hash functions in SQL Server for incremental data loading gives a big performance advantage when you have millions of rows to load, or several dozen columns to compare in order to decide whether to update, insert, or expire a row, as described in Andy Leonard's Anatomy of an Incremental Load. Brett Flippin showed how to calculate hash columns with an SSIS Script Component in his article Speeding Up Incremental ETL Processes in SSIS by Using MD5 Hashes. Today I would like to demonstrate how to calculate hash columns with a stored procedure. The stored procedure automatically concatenates all columns of a given table except the hash columns, and then generates a hashKey for each row. All you need to provide is the schema name and the table name, which saves the time and effort of dragging and mapping columns for tables with different structures.

First let's start with the script to create our test database and destination table:

-- Create test database

use master

go

if exists(select name from sys.databases where name = 'DemoSQL2012')

drop database DemoSQL2012

go

create database DemoSQL2012

go

-- Create Contact Destination table

use DemoSQL2012

go

if exists(select name from sys.tables where name = 'hashTest')

drop table dbo.hashTest

go

CREATE TABLE [dbo].[hashTest](

[personId] [int] IDENTITY(1,1) NOT NULL,

[firstName] [varchar](10) NULL,

[lastName] [varchar](10) NULL,

[hashKey] [varbinary](256) NULL,

CONSTRAINT [PK_hashtest] PRIMARY KEY CLUSTERED

(

[personId] ASC

))

INSERT INTO [dbo].[hashTest] ([firstName], [lastName])

VALUES ('David', 'Smith'),

('Bob', 'Stones')

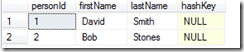

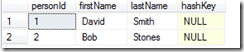

If you check the table dbo.hashTest, you will see that it has four columns and two records, with the hashKey column set to NULL.

Create Stored Proc dbo.GetHashKey

Next, run the following script to create the stored procedure that generates the hashKey.

USE [DemoSQL2012]

GO

CREATE PROCEDURE [dbo].[GetHashKey] ( @pSchemaName sysname,@pTableName sysname,@pDebug INT = NULL )

/************************************************************************************************************

Description: Populates hashKey column of target table indicated by @pTableName parm, using SHA1 algorithm.

Here the hashkey column is called hashKey.

The concatenated string passed to HASHBYTES has the form col1$col2$col3$ (every value is followed by '$'; NULL values become '~').

Examples: exec [dbo].[GetHashKey] 'dbo','hashTest', 1 to get the concatenated string to be calculated by Hashbytes fcn.

exec [dbo].[GetHashKey] 'dbo','hashTest', 2 to get the sql to update hashKey columns.

*************************************************************************************************************/

AS

BEGIN

SET NOCOUNT ON

DECLARE @SchemaName VARCHAR(MAX)

DECLARE @TableName VARCHAR(MAX)

DECLARE @HashSql VARCHAR(MAX)

DECLARE @sql VARCHAR(MAX) = ''

DECLARE @CRLF char(2) = CHAR(13)+CHAR(10)

SET @SchemaName = @pSchemaName

SET @TableName = @pTableName

--- Concatenate strings for Hashbyte functions by INFORMATION_SCHEMA

SELECT @HashSql = COALESCE(@HashSql + '+ ', '')

+ 'ISNULL(CAST('+ QUOTENAME(COLUMN_NAME)+' as varchar(max)),''~'')+''$'''+@CRLF -- varchar without a length would truncate values at 30 characters

FROM INFORMATION_SCHEMA.COLUMNS

WHERE TABLE_NAME = @TableName

AND TABLE_SCHEMA = @SchemaName

AND COLUMN_NAME NOT LIKE 'hash%'

SET @sql = @sql + 'DECLARE @updateChunk int = 4000000;

WHILE 1=1

BEGIN

'

SET @sql = @sql + 'UPDATE Top(@updateChunk) '+ QUOTENAME(@SchemaName) + '.' + QUOTENAME(@TableName) + ' ' + @CRLF

SET @sql = @sql + 'SET hashKey = ' + @CRLF

SET @sql = @sql + 'HashBytes(''SHA1'','+ @HashSql + ')'+@CRLF

SET @sql = @sql + 'WHERE hashKey is null;

IF @@RowCount=0

BREAK;

END'

IF (@pDebug = 1)

BEGIN

DECLARE @sqlStr2Hash VARCHAR(MAX) = 'SELECT ' + @HashSql + ' as hashCol FROM ' + QUOTENAME(@SchemaName) + '.' + QUOTENAME(@TableName);

EXEC (@sqlStr2Hash)

END

ELSE

BEGIN

IF (@pDebug = 2)

SELECT @sql;

ELSE

EXEC (@sql);

END

END

GO

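Here we use the SHA1 algorithm with the HASHBYTES function. According to the article “Exploring Hash Functions in SQL Server”, SHA1 is one of the reliable algorithms with the “best spread over the hash space”. Note that Microsoft has deprecated SHA1 in HASHBYTES starting with SQL Server 2016, so on newer versions SHA2_256 is the safer choice. The procedure reads the INFORMATION_SCHEMA.COLUMNS metadata view to obtain all columns of the given table, skipping any column whose name starts with 'hash'.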
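To make the generated expression concrete, here is a hand-written sketch of roughly what the procedure builds for dbo.hashTest. The first query lists the columns that take part in the hash; the second spells out the delimited string and its SHA1 hash by hand. The explicit varchar lengths and the aliases stringToHash and sha1Hash are my own choices for illustration, not something the procedure emits.

```sql
USE DemoSQL2012;
GO

-- Columns the procedure will concatenate (everything not starting with 'hash')
SELECT COLUMN_NAME, DATA_TYPE, ORDINAL_POSITION
FROM INFORMATION_SCHEMA.COLUMNS
WHERE TABLE_SCHEMA = 'dbo'
  AND TABLE_NAME = 'hashTest'
  AND COLUMN_NAME NOT LIKE 'hash%'
ORDER BY ORDINAL_POSITION;

-- Hand-written equivalent of the string and hash for each row.
-- NULL values become '~' and every value is followed by '$'.
SELECT personId,
       ISNULL(CAST(personId  AS varchar(12)), '~') + '$'
     + ISNULL(CAST(firstName AS varchar(10)), '~') + '$'
     + ISNULL(CAST(lastName  AS varchar(10)), '~') + '$' AS stringToHash,
       HASHBYTES('SHA1',
           ISNULL(CAST(personId  AS varchar(12)), '~') + '$'
         + ISNULL(CAST(firstName AS varchar(10)), '~') + '$'
         + ISNULL(CAST(lastName  AS varchar(10)), '~') + '$') AS sha1Hash
FROM dbo.hashTest;
```

For a row with personId 1, firstName David and lastName Smith, stringToHash would be 1$David$Smith$. Because personId is part of the string, two rows with identical names still get different hashes.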

How to use Stored Proc dbo.GetHashKey?

To call this stored procedure we would execute it as follows:

exec [dbo].[GetHashKey] 'dbo','hashTest', 1

It will show you the concatenated string for each row.

If you call the stored procedure with @pDebug set to 2, you will get the SQL script that updates the hashKey column.

exec [dbo].[GetHashKey] 'dbo','hashTest', 2

If you want to update the hashKey column, call the stored procedure without @pDebug. Only rows whose hashKey is still NULL are updated:

exec [dbo].[GetHashKey] 'dbo','hashTest'

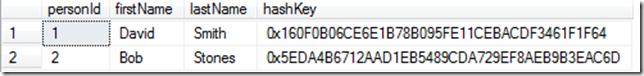

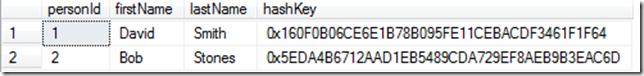

Now you can see the table is updated with hashKey:

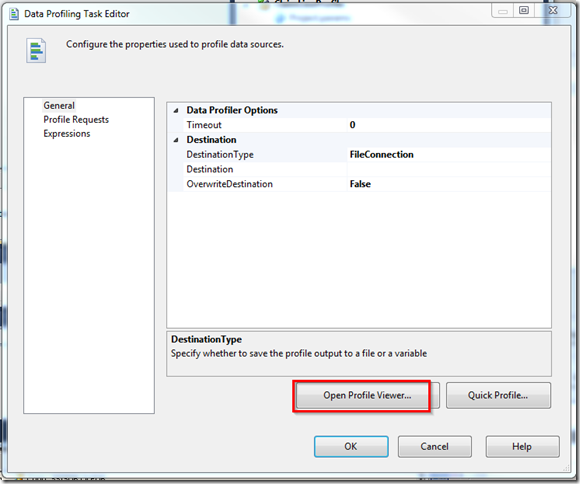

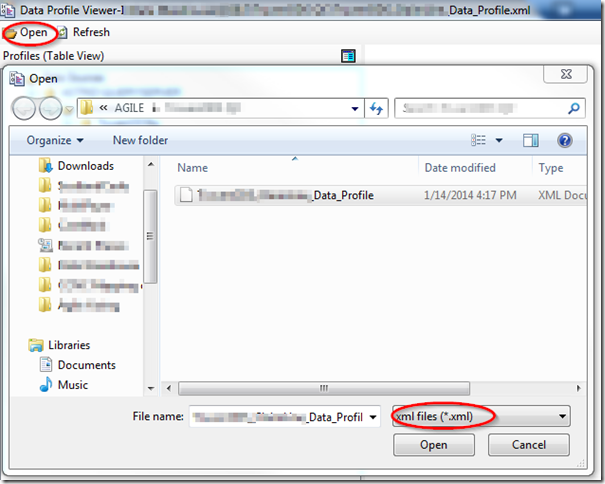
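Because the generated UPDATE only touches rows where hashKey is NULL, a row that changes later keeps its old hash until you clear it. A minimal sketch of refreshing one row, assuming you reset hashKey yourself as part of the change (the new last name is just sample data):

```sql
-- Change a row and clear its hash so the procedure picks it up again
UPDATE dbo.hashTest
SET lastName = 'Stone',
    hashKey  = NULL
WHERE personId = 2;

-- Recalculate hashes for all rows whose hashKey is NULL
EXEC dbo.GetHashKey 'dbo', 'hashTest';

-- Inspect the result
SELECT personId, firstName, lastName, hashKey
FROM dbo.hashTest;
```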

If you would like to use this stored procedure in SSIS packages, you can call it from an Execute SQL Task, with the schema name and table name passed in as parameters.
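Once both the source and the destination carry a hashKey, the comparison step of an incremental load might look like the sketch below. It assumes a hypothetical staging table dbo.hashTestStaging with the same columns as dbo.hashTest, whose hashKey was filled by the same procedure; that table is not part of the scripts above.

```sql
-- Assumption: dbo.hashTestStaging is a hypothetical staging table with the
-- same columns as dbo.hashTest, hashed with EXEC dbo.GetHashKey 'dbo', 'hashTestStaging'.

-- Rows that exist in both tables but whose content changed.
-- Rows with a NULL hashKey on either side are not returned, so hash both tables first.
SELECT s.personId
FROM dbo.hashTestStaging AS s
INNER JOIN dbo.hashTest AS d
        ON d.personId = s.personId
WHERE s.hashKey <> d.hashKey;

-- Rows that are new in staging and need to be inserted
SELECT s.personId
FROM dbo.hashTestStaging AS s
WHERE NOT EXISTS (SELECT 1
                  FROM dbo.hashTest AS d
                  WHERE d.personId = s.personId);
```

Comparing one 20-byte hash per row replaces a column-by-column comparison, which is where the performance gain for wide tables comes from.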

Wrap up

You can call the above stored procedure to speed up the calculation of hashKey columns for different tables when loading a data warehouse. The only required inputs are the schema name and the table name. With the @pDebug parameter, it is easy to debug your hash strings or SQL scripts.

Also please keep in mind that in SQL Server 2014 and earlier, the HASHBYTES function has an input limit of 8000 bytes (the limit was removed in SQL Server 2016). For extremely wide tables on those versions, you can lean on SQL Server's CLR integration and perform the hashing in a .NET assembly.
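To find out whether a table is affected by the 8000-byte limit, you can measure the concatenated string before hashing it. The query below is a rough sketch for dbo.hashTest, written by hand with varchar(max) casts so that the length itself is not cut off; the alias bytesToHash is illustrative. For the small demo table it returns no rows.

```sql
-- Rows whose concatenated string would exceed the 8000-byte HASHBYTES limit
SELECT personId, bytesToHash
FROM (
    SELECT personId,
           DATALENGTH(
               ISNULL(CAST(personId  AS varchar(max)), '~') + '$'
             + ISNULL(CAST(firstName AS varchar(max)), '~') + '$'
             + ISNULL(CAST(lastName  AS varchar(max)), '~') + '$') AS bytesToHash
    FROM dbo.hashTest
) AS x
WHERE bytesToHash > 8000;
```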